Artificial intelligence (AI) systems have become increasingly prevalent in today’s society, with AI assistants and search engines being just a few examples of their applications. One of the most common approaches to building AI systems is through the use of artificial neural networks (ANNs), which are inspired by the structure of the human brain. However, like their biological counterparts, ANNs are not infallible and can sometimes be deceived or confused by certain inputs.

In particular, ANNs can misinterpret inputs that look perfectly normal to a human, leading to errors in decision-making. For example, an image-classifying system may mistake a cat for a dog, or a driverless car may misread a stop sign as a right-of-way sign. These errors can have significant consequences, especially in sensitive applications such as medical diagnostics or autonomous vehicles.

Inputs that are deliberately crafted to trigger such mistakes are known as adversarial attacks, and various defense techniques have been developed to protect ANNs against them. One common strategy is to add noise to the input layer, which can help the network tolerate small variations in its inputs. However, this approach has its limitations and may not be effective against certain types of attacks.
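As a rough illustration of input-layer noise, the sketch below averages a classifier's predictions over several noisy copies of the same input. The article does not say how the noise is drawn or used, so the Gaussian noise, the toy linear classifier, and the names `predict_with_input_noise`, `sigma`, and `n_samples` are all assumptions made for this example.

```python
import numpy as np

def softmax(z):
    # Numerically stable softmax over the last axis.
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def predict_with_input_noise(x, W, b, sigma=0.1, n_samples=32, rng=None):
    """Average class probabilities over noisy copies of one input vector x.

    Assumption: zero-mean Gaussian noise with standard deviation `sigma`.
    """
    rng = np.random.default_rng() if rng is None else rng
    # Build n_samples noisy copies of x, shape (n_samples, n_features).
    noisy = x + sigma * rng.standard_normal((n_samples, x.shape[0]))
    # Toy linear classifier standing in for a real network.
    probs = softmax(noisy @ W + b)
    return probs.mean(axis=0)

# Hypothetical toy setup: 4 input features, 3 classes.
rng = np.random.default_rng(0)
W = rng.standard_normal((4, 3))
b = np.zeros(3)
x = rng.standard_normal(4)
probs = predict_with_input_noise(x, W, b, sigma=0.1, rng=rng)
```

Averaging over noisy copies means a tiny, carefully chosen change to `x` has to survive the random perturbation to flip the prediction. With `sigma=0.0` the function reduces to the ordinary, noise-free prediction.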

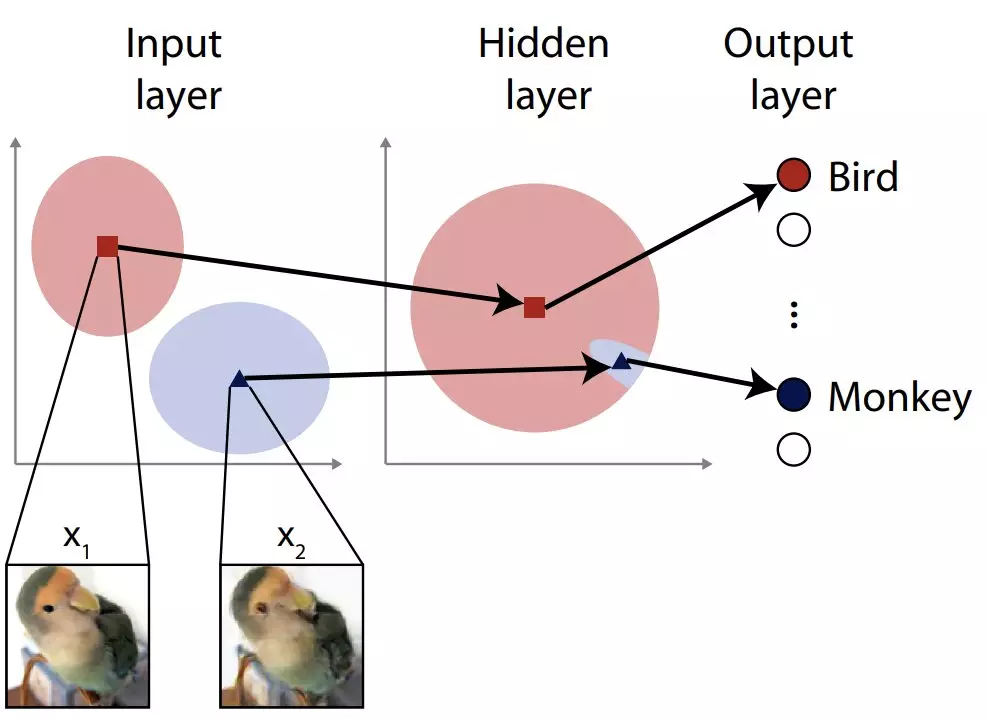

Researchers at the University of Tokyo Graduate School of Medicine, Jumpei Ukita and Professor Kenichi Ohki, sought to improve the resilience of ANNs by looking beyond the input layer. Drawing inspiration from their studies of the human brain, they devised a method that adds noise not only to the input layer but also to deeper layers of the network.
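The idea of adding noise beyond the input layer can be sketched with a small fully connected network. This is only a minimal illustration under stated assumptions, not the researchers' implementation: the layer sizes, the ReLU activation, the Gaussian noise, and the `input_sigma` and `hidden_sigma` parameters are hypothetical choices made here.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def forward(x, params, input_sigma=0.0, hidden_sigma=0.0, rng=None):
    """Forward pass with optional Gaussian noise at the input and hidden layers.

    `params` is a list of (W, b) pairs; the last pair is the output layer.
    """
    rng = np.random.default_rng() if rng is None else rng
    # Noise at the input layer (the conventional defense).
    h = x + input_sigma * rng.standard_normal(x.shape)
    *hidden, (W_out, b_out) = params
    for W, b in hidden:
        h = relu(h @ W + b)
        # Noise injected into the hidden (deeper) layer activations.
        h = h + hidden_sigma * rng.standard_normal(h.shape)
    # The output layer is left noise-free in this sketch.
    return h @ W_out + b_out

# Hypothetical toy network: 8 inputs, two hidden layers of 16 units, 3 outputs.
rng = np.random.default_rng(0)
sizes = [8, 16, 16, 3]
params = [(rng.standard_normal((m, n)) * 0.1, np.zeros(n))
          for m, n in zip(sizes[:-1], sizes[1:])]
x = rng.standard_normal(8)

clean_logits = forward(x, params)
noisy_logits = forward(x, params, input_sigma=0.1, hidden_sigma=0.1, rng=rng)
```

Setting both noise levels to zero recovers the ordinary deterministic network, which makes it easy to compare behavior with and without the injected noise.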

Ukita and Ohki coined the term “feature-space adversarial examples” to describe their hypothetical method of attack. Unlike traditional attacks that target the input layer, these attacks aim to mislead the deeper layers of the ANN. Having demonstrated that such attacks pose a significant threat, the researchers injected random noise into the network’s hidden layers to make it more adaptable and better defended.

One might expect added noise to degrade a network, but adding noise to the deeper layers did not hinder its performance under normal conditions. Instead, it promoted greater adaptability and reduced the network’s susceptibility to adversarial attacks. The approach shows promise for making ANNs more resilient to malicious manipulation.

While the results of this study are promising, Ukita and Ohki acknowledge that further development is necessary. The defense currently protects only against the specific kind of attack used in their research, and future attackers may employ different methods that bypass the feature-space noise defense. The researchers therefore plan to refine their approach and make it effective against a broader range of attacks.

Enhancing the resilience of AI systems is crucial for ensuring their reliability and preventing potential harm. As AI continues to permeate various aspects of society, from autonomous vehicles to medical diagnostics, the stakes are higher than ever. Researchers and practitioners must work together to develop robust defense techniques that can keep pace with evolving threats.

The study by Ukita and Ohki shows that looking beyond the input layer, and introducing noise into a network’s inner layers, can improve its ability to withstand adversarial attacks. The research contributes to the field of AI security and underscores the need for continued work on defense techniques, so that AI systems can be trusted in critical applications.
